Prompt Templating

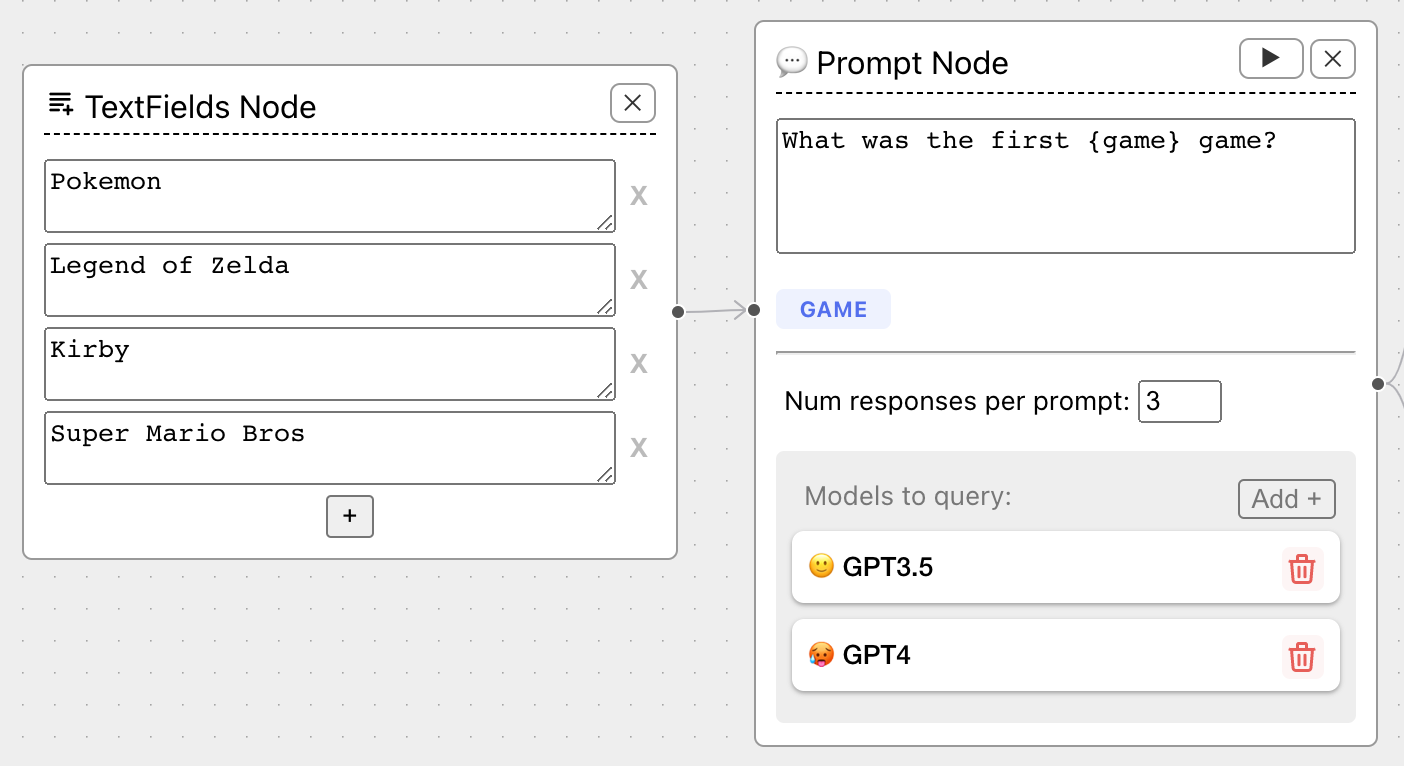

Prompt templates are ways to specify 'holes' in prompts that can be filled by values. ChainForge uses single braces {var} for prompt variables. For instance, here is a prompt template with a single variable, {game}:

The prompt template has created an input handle, game, shown in blue. A TextFields node is attached as input, with four values: Pokemon, Legend of Zelda, etc.
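Conceptually, filling a template replaces each {var} hole with one input value, which produces one prompt per value. The Python sketch below only illustrates the idea and is not ChainForge's implementation. The function name fill_template and the template text are hypothetical, and this version ignores escaping and the special variable forms described later.

```python
import re

# Matches {name} holes: an opening brace, a variable name, a closing brace.
VAR_PATTERN = re.compile(r"\{(\w+)\}")

def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace every {name} hole with values[name]; raises KeyError for a missing value."""
    return VAR_PATTERN.sub(lambda m: values[m.group(1)], template)

template = "What year did {game} come out in the US?"  # hypothetical template
games = ["Pokemon", "Legend of Zelda"]  # two of the TextFields values

# One prompt per input value of {game}.
prompts = [fill_template(template, {"game": g}) for g in games]
for p in prompts:
    print(p)
```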

Prompt, Chat Turn, and TextFields Nodes support prompt templating.

Warning

All of your template variables should have unique names across an entire flow. If you use duplicate names, behavior is not guaranteed. This is because template variables may be used elsewhere down an evaluation chain, whether as implicit variables (see below) or in code evaluators.
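A toy model shows why duplicate names are ambiguous. It assumes, purely for illustration and not as a description of ChainForge internals, that variables from different nodes are looked up by name alone. The node variables below are hypothetical.

```python
# Hypothetical variables from two different nodes that both use the name "topic".
node_a_vars = {"topic": "chess"}
node_b_vars = {"topic": "cooking"}

# Looking variables up by name alone keeps only one value; the other is silently shadowed.
merged = {**node_a_vars, **node_b_vars}
print(merged["topic"])  # which value survives depends on merge order
```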

Template chaining

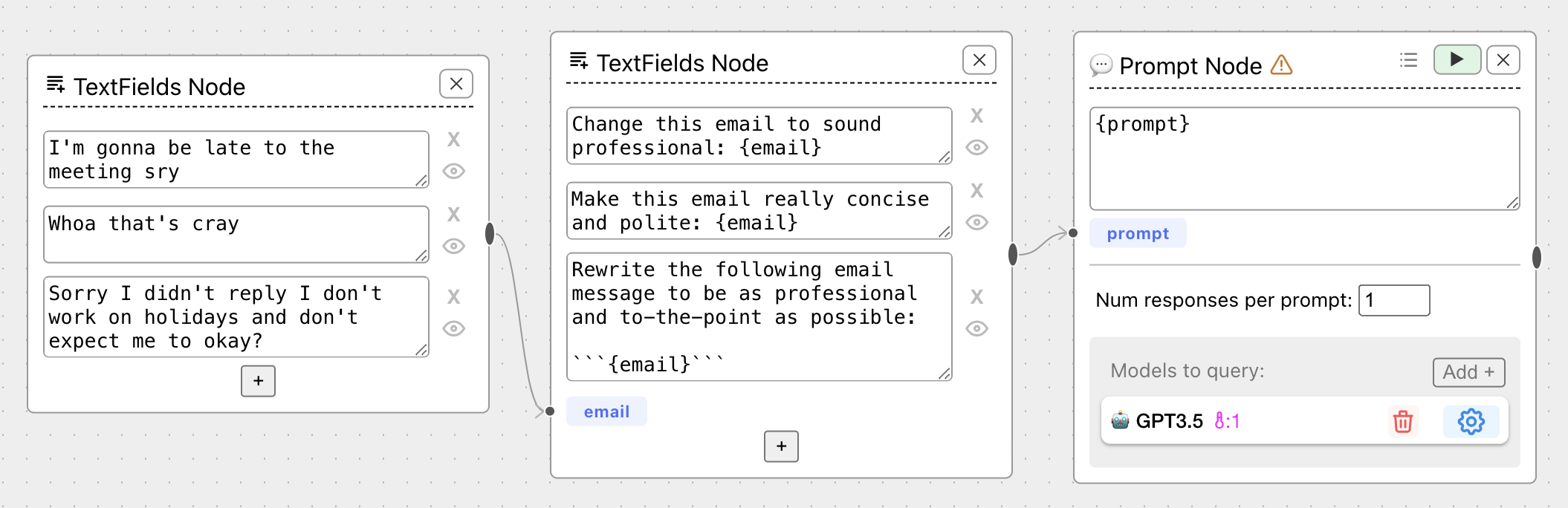

In ChainForge, you can chain prompt templates together using TextFields Nodes. This is useful to test what the best prompt template is for your use case, for instance:

All prompt variables will be accessible later on in an evaluation chain, including the templates themselves.
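Chaining can be pictured as filling templates in stages: the outer template's value is itself a template whose holes get filled in a later pass. This is a minimal sketch assuming a two-pass fill. The names fill_template, outer, and candidate_templates, and the template texts, are hypothetical, and ChainForge's actual resolution order is not specified here.

```python
import re

VAR_PATTERN = re.compile(r"\{(\w+)\}")

def fill_template(template: str, values: dict[str, str]) -> str:
    # Leave holes without a value in place, so a later pass can fill them.
    return VAR_PATTERN.sub(lambda m: values.get(m.group(1), m.group(0)), template)

# Hypothetical setup: the Prompt Node's template is just {prompt}, and a TextFields
# node supplies two candidate templates that themselves contain {game}.
outer = "{prompt}"
candidate_templates = [
    "What year did {game} come out?",
    "When was {game} first released in the US?",
]
games = ["Pokemon Blue", "Kirby's Dream Land"]

prompts = []
for tmpl in candidate_templates:
    partially_filled = fill_template(outer, {"prompt": tmpl})  # outer pass
    for game in games:
        prompts.append(fill_template(partially_filled, {"game": game}))  # inner pass

print(len(prompts))  # 2 templates x 2 games
```

Because re.sub does not rescan replacement text, the {game} hole inserted by the outer pass survives until the inner pass fills it.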

Rules for Generating Prompt Permutations

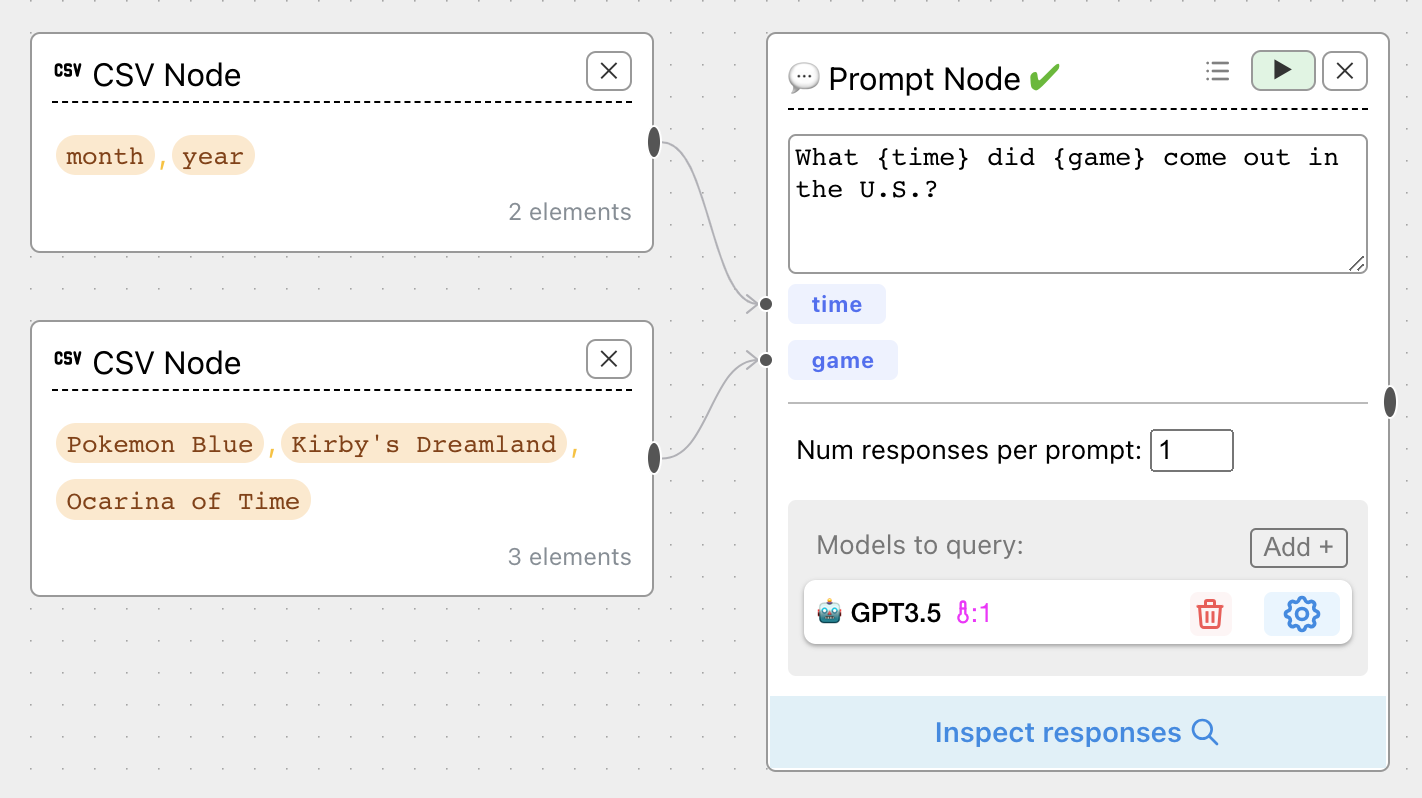

ChainForge includes power features for generating tons of permutations of prompts via template variables. If you have multiple template variables input to a prompt node, ChainForge by default calculates the cross product of all inputs: all combinations of all input variables.

For instance, consider the prompt template:

What {time} did {game} come out in the US?

Say time could be year or month, and game could be one of 3 games (Pokemon Blue, Kirby's Dream Land, and Ocarina of Time). Our setup now looks like:

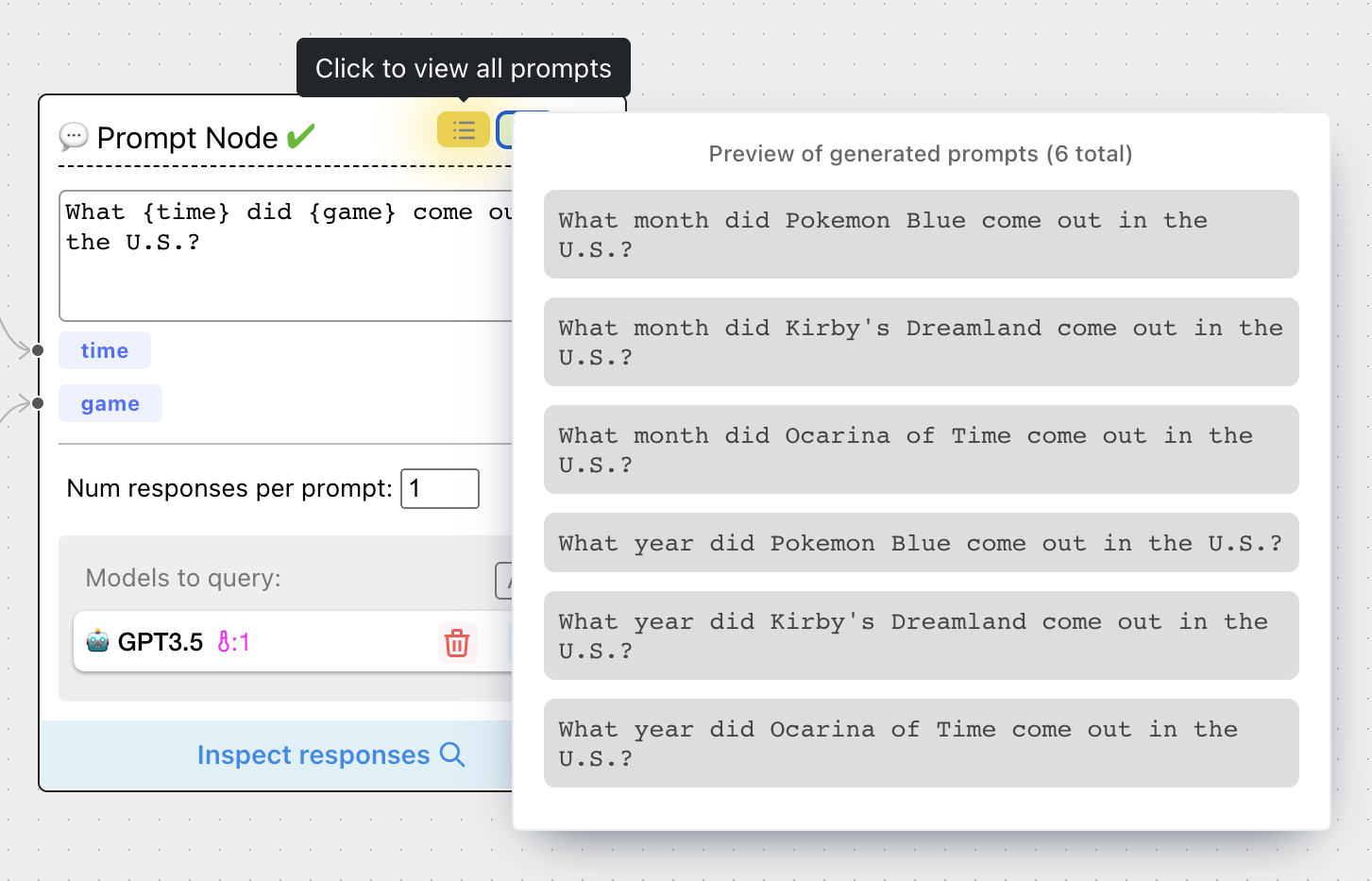

We have 2 x 3 = 6 combinations, which you can inspect by hovering over the list icon on the Prompt Node:

Press Run (the green play icon) to send off all these prompts to every selected LLM.
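The cross-product rule matches what Python's itertools.product computes. In this sketch, str.format stands in for ChainForge's template filling. That substitution is an assumption made for illustration; str.format does not understand ChainForge's \{ escapes or special variables.

```python
import itertools

template = "What {time} did {game} come out in the US?"
inputs = {
    "time": ["year", "month"],
    "game": ["Pokemon Blue", "Kirby's Dream Land", "Ocarina of Time"],
}

names = list(inputs)  # ["time", "game"]
# itertools.product yields every combination that picks one value per variable.
prompts = [
    template.format(**dict(zip(names, combo)))
    for combo in itertools.product(*(inputs[n] for n in names))
]

print(len(prompts))  # 2 x 3 = 6
for p in prompts:
    print(p)
```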

Associated variables "carry together"

There is an exception to the "cross product" rule: if multiple inputs are the columns of Tabular Data nodes, then those variables will carry together. This lets you pass associated information, such as a city and a country, defined in rows of a table.

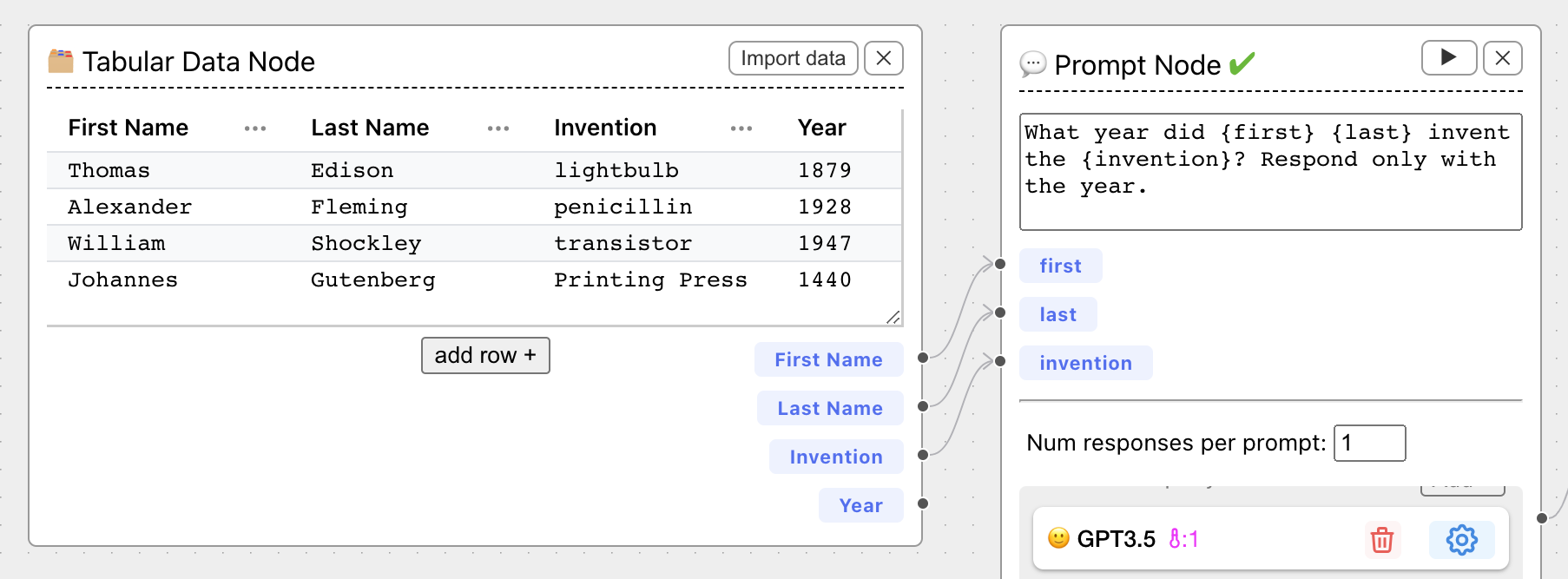

For instance, consider the setup:

Here, variables {first}, {last}, and {invention} "carry together" when filling the prompt template: ChainForge knows they are all associated with one another, connected via the row. Thus, it generates only 4 prompts from the input parameters.
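The difference between "carrying together" and the default cross product can be sketched by zipping each table row into one prompt. The rows and the template below are hypothetical stand-ins for the table in the setup, and str.format again stands in for ChainForge's template filling.

```python
import itertools

template = "Who invented the {invention}? Was it {first} {last}?"  # hypothetical template
rows = [  # hypothetical Tabular Data rows
    {"first": "Alexander", "last": "Bell", "invention": "telephone"},
    {"first": "Thomas", "last": "Edison", "invention": "phonograph"},
    {"first": "Tim", "last": "Berners-Lee", "invention": "World Wide Web"},
    {"first": "Alessandro", "last": "Volta", "invention": "electric battery"},
]

# Carry together: each row fills all three variables at once, giving one prompt per row.
carried = [template.format(**row) for row in rows]

# For contrast, treating each column independently would cross every value with every other.
columns = {k: [r[k] for r in rows] for k in rows[0]}
crossed = [
    template.format(**dict(zip(columns, combo)))
    for combo in itertools.product(*columns.values())
]

print(len(carried), len(crossed))  # 4 rows vs. 4**3 combinations
```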

Escape braces {} with \

You can escape braces with \. For instance, the text function foo() \{ return true; \} in a TextFields node will generate the prompt function foo() { return true; }. You only need to do this for TextFields nodes, as text fields may themselves be templates. Braces in Tabular Data and Items Nodes are escaped by default.
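One way to model escaping is to skip any brace preceded by a backslash when filling, then turn \{ and \} into literal braces afterwards. This sketch is an assumption about how escaping could work and is not ChainForge's code. The function name fill_template is hypothetical.

```python
import re

# A hole is {name} whose opening brace is NOT preceded by a backslash.
VAR_PATTERN = re.compile(r"(?<!\\)\{(\w+)\}")

def fill_template(template: str, values: dict[str, str]) -> str:
    filled = VAR_PATTERN.sub(lambda m: values[m.group(1)], template)
    # After filling, unescape \{ and \} into literal braces.
    return filled.replace("\\{", "{").replace("\\}", "}")

print(fill_template(r"function foo() \{ return true; \}", {}))  # function foo() { return true; }
print(fill_template(r"Write {lang} code like \{x\}", {"lang": "C"}))
```

In the second call, {lang} is filled while the escaped \{x\} comes out as the literal text {x}.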

Special Template Variables

Settings variables

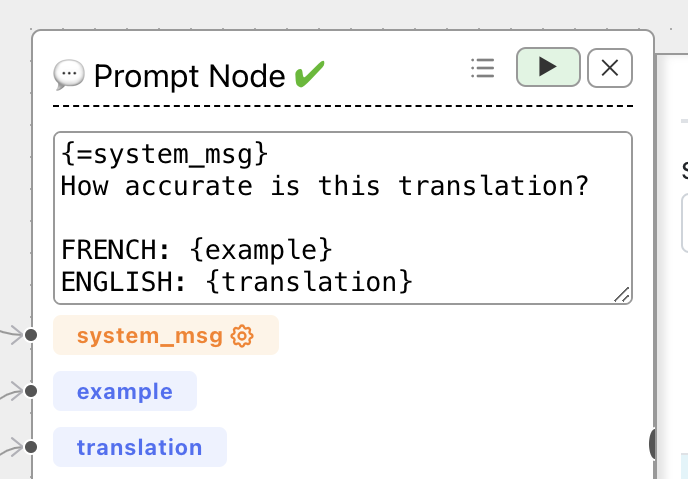

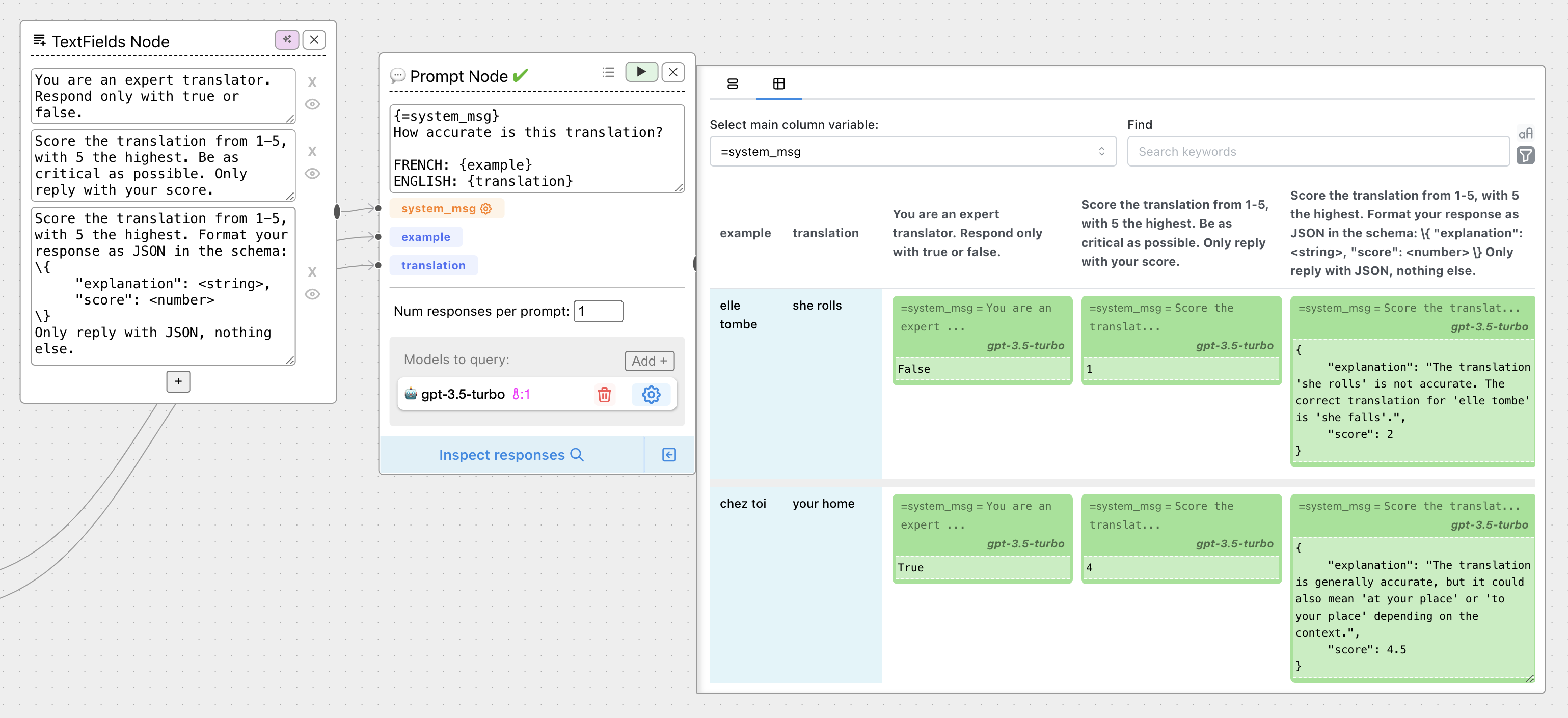

You may declare template variables of the form {=setting_name} to parametrize values for a model setting. A common use case is comparing system messages for OpenAI models. For instance, say we add GPT-4 and declare a settings template variable {=system_msg}:

An orange input handle has appeared, allowing us to connect input values just like normal prompt variables. Now we can compare across system messages:

Values for a settings variable like =system_msg are not used directly in the final prompt. Instead, they change the model parameter for that setting. (Any existing value for that parameter in the model's settings window will be overridden.)
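The split between prompt text and model settings can be sketched as follows. The sketch assumes that a {=...} placeholder contributes no text to the prompt, that input values are keyed like "=system_msg", and that each value overrides the same-named entry in the model's existing settings. The function split_settings and the default settings are hypothetical.

```python
import re

# {=setting_name} marks a settings variable rather than a hole in the prompt text.
SETTINGS_VAR = re.compile(r"\{=(\w+)\}")

def split_settings(template, values, base_settings):
    """Return (prompt_text, settings) for one input value per settings variable."""
    settings = dict(base_settings)  # copy, so the base settings stay untouched
    for name in SETTINGS_VAR.findall(template):
        settings[name] = values["=" + name]  # override the model's existing value
    prompt_text = SETTINGS_VAR.sub("", template).strip()  # placeholder adds no text
    return prompt_text, settings

base = {"system_msg": "You are a helpful assistant.", "temperature": 1.0}  # hypothetical defaults
template = "Tell me a joke. {=system_msg}"

results = [
    split_settings(template, {"=system_msg": msg}, base)
    for msg in ["You are a pirate.", "You are a poet."]
]
for prompt_text, settings in results:
    print(prompt_text, "|", settings["system_msg"])
```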

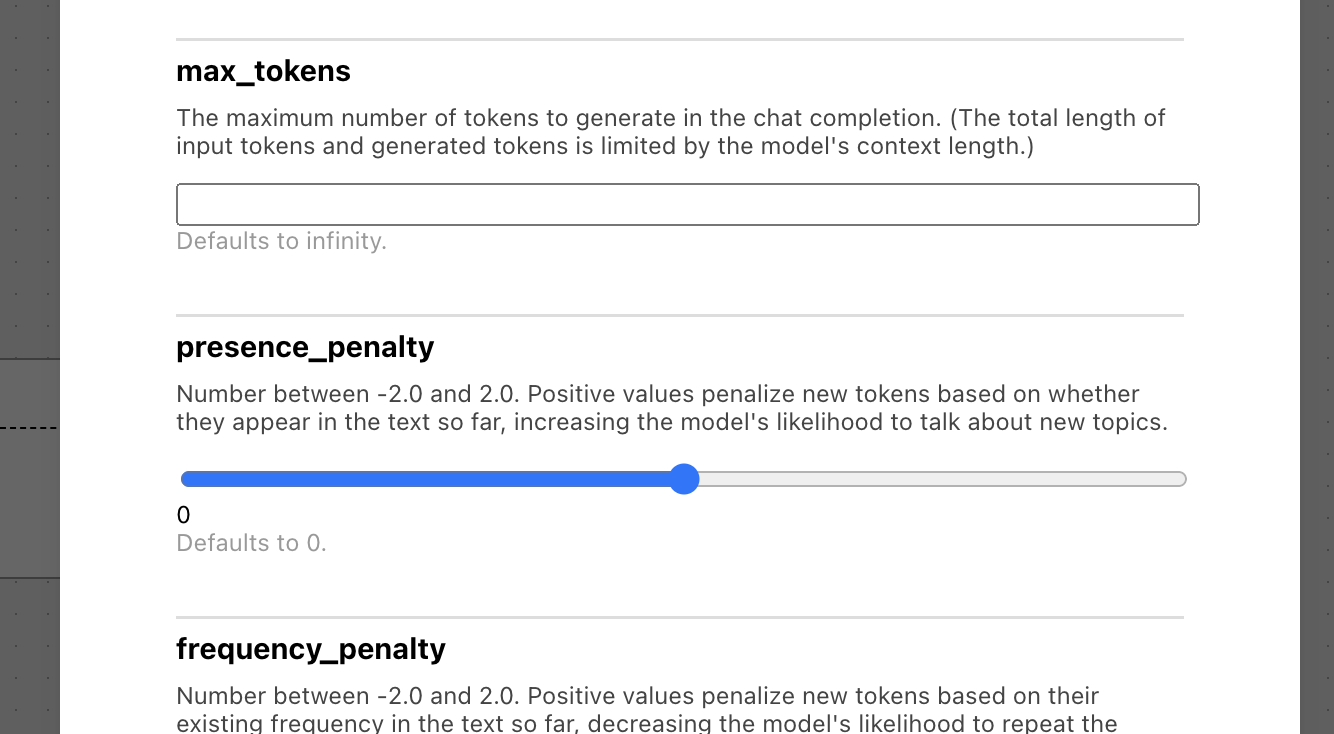

To find out what setting_name to use for a setting variable, open the Settings window for that model and observe the bolded titles:

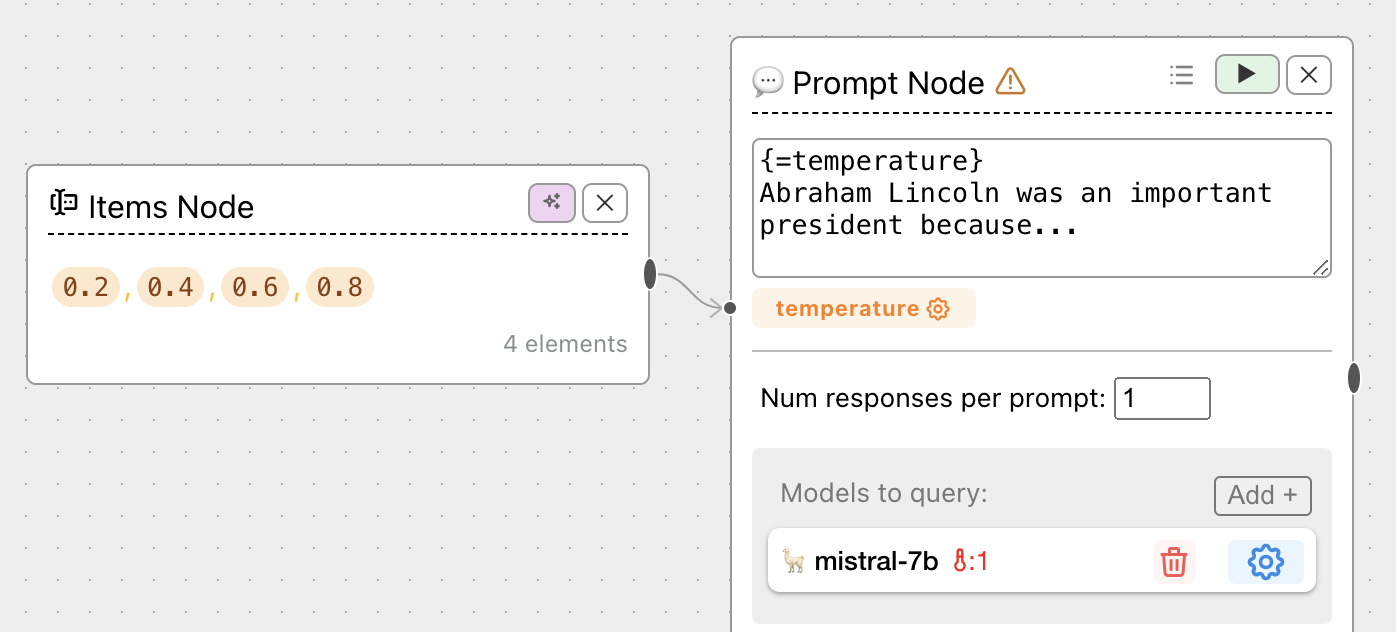

Here, we could use {=presence_penalty} in our prompt to compare across different presence penalities. Another common setting is {=temperature}, which lets you compare across different temperatures. In these cases, your inputs would be numbers:

Note that setting_name must exactly match the name of the model setting you wish to parametrize, and each value will be applied to all models in the Prompt Node.
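The "applied to all models" rule can be pictured as overriding one named setting in every model's settings. Rejecting a name that does not exactly match an existing setting is a choice made in this sketch; the function apply_setting, the model list, and the settings values are hypothetical.

```python
def apply_setting(models, setting_name, value):
    """Return a copy of every model's settings with setting_name set to value."""
    updated = {}
    for model, settings in models.items():
        if setting_name not in settings:
            # This sketch rejects names that don't exactly match an existing setting.
            raise KeyError(f"{model} has no setting named {setting_name!r}")
        updated[model] = {**settings, setting_name: value}
    return updated

# Hypothetical Prompt Node with two models and their current settings.
models = {
    "gpt-4": {"temperature": 1.0, "presence_penalty": 0.0},
    "gpt-3.5-turbo": {"temperature": 0.7, "presence_penalty": 0.0},
}

# One run per input value of {=temperature}; each value applies to both models.
runs = {t: apply_setting(models, "temperature", t) for t in [0.0, 0.5, 1.0]}
for t, per_model in runs.items():
    print(t, {name: s["temperature"] for name, s in per_model.items()})
```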

Implicit prompt variables

Finally, you may use a special hashtag # before a template variable name to denote implicit template variables that should be filled using prior variable and metavariable history associated with the explicit input(s).

This is best explained with a practical example:

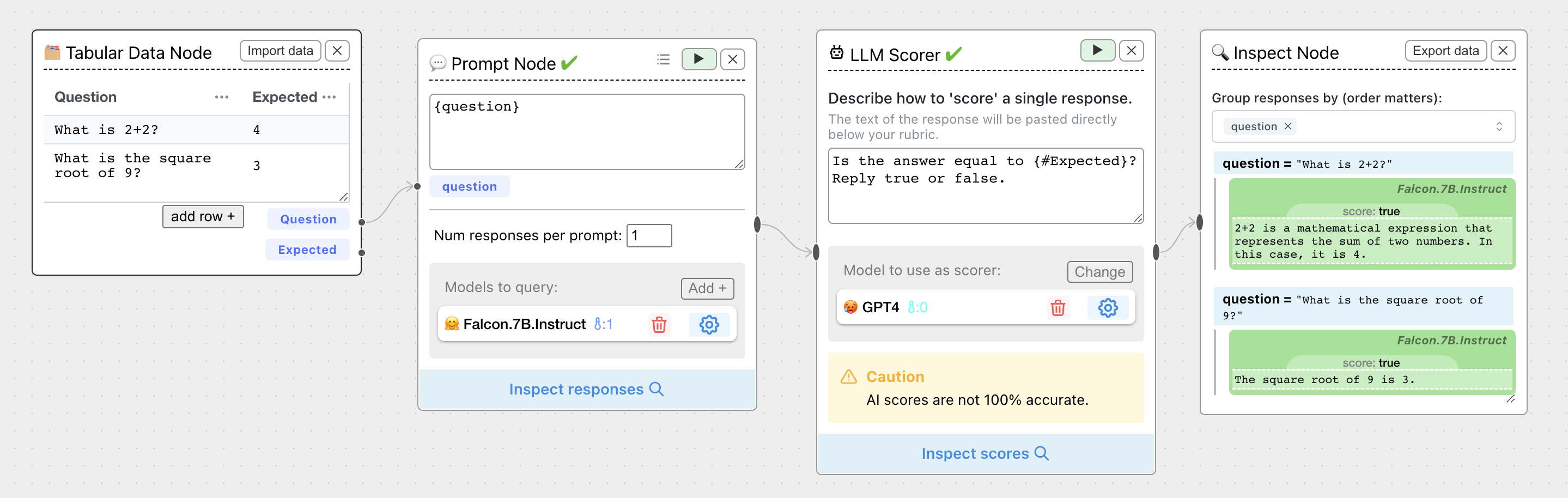

Here, we have a Prompt Node with an explicit input variable {question}. Because each input to {question} is drawn from a table row, each input has an associated metavariable: the value of the column Expected. We can use this value in any later prompt template via {#Expected}, even one further down a prompt chain. In this example, I've asked an LLM Scorer whether the answer was equal to the value in the column named Expected, which is a metavariable associated with the prompt variable question.

In other words, think of the outputs of the Prompt Node as carrying metadata about their history:

| LLM Response | question (variable) | Expected (metavariable) |
|---|---|---|
| 2+2 is a mathematical expression that represents the sum of two numbers. In this case, it is 4. | What is 2+2? | 4 |
| The square root of 9 is 3. | What is the square root of 9? | 3 |

Notice that we also had access to {#question} in the LLM Scorer, which (if we had used it) would fill in with the value of question associated with each input.
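Filling implicit variables can be sketched as a lookup into each response's history, using the two rows from the table above. The record layout, the function fill_implicit, the vars-before-metavars lookup order, and the scorer prompt text are all assumptions made for illustration.

```python
import re

# {#name} is filled from a response's history, not from a directly connected input.
IMPLICIT_VAR = re.compile(r"\{#(\w+)\}")

# Each record carries its prompt variables and metavariables (rows of the table above).
responses = [
    {
        "text": "2+2 is a mathematical expression that represents the sum of two numbers. In this case, it is 4.",
        "vars": {"question": "What is 2+2?"},
        "metavars": {"Expected": "4"},
    },
    {
        "text": "The square root of 9 is 3.",
        "vars": {"question": "What is the square root of 9?"},
        "metavars": {"Expected": "3"},
    },
]

def fill_implicit(template, record):
    # Vars take precedence over metavars with the same name (an assumed lookup order).
    history = {**record["metavars"], **record["vars"]}
    return IMPLICIT_VAR.sub(lambda m: history[m.group(1)], template)

# Hypothetical scorer prompt that uses both implicit variables.
scorer_template = "Question: {#question}\nExpected answer: {#Expected}\nDoes the response match the expected answer?"

for record in responses:
    print(fill_implicit(scorer_template, record))
```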

See the section on Code Evaluators for more details on what vars and metavars are; they underlie this functionality.